Cade Metz

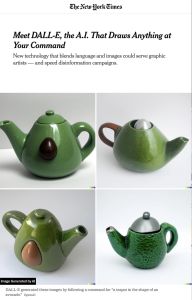

Meet DALL-E, the A.I. That Draws Anything at Your Command

New technology that blends language and images could serve graphic artists – and speed disinformation campaigns.

The New York Times, 2022

What's inside?

Is OpenAI too dangerous for the internet?

Recommendation

OpenAI, backed by a billion-dollar investment from Microsoft, has created DALL-E, a program that combines image and text analysis to generate realistic images from verbal commands. It is the latest advance in neural networks – systems that sift vast amounts of data to identify and classify images. While this application could be a boon to graphic designers and digital assistant developers, it’s also potentially dangerous in the wrong hands: Disinformation and “deep fakes” already circulate on the internet, and DALL-E could make the problem worse. It’s not yet on the market and is available only to researchers – for now.

Summary

About the Author

Cade Metz is a technology correspondent with The New York Times. He covers artificial intelligence, driverless cars, robotics, virtual reality and other emerging technologies. He is the author of Genius Makers: The Mavericks Who Brought AI to Google, Facebook, and the World.
